
Currently a PhD student at TU Vienna under Professor Dongheui Lee, focusing on reinforcement learning for robotics applications. Previously a research engineer at UC Berkeley advised by Professor Sergey Levine. Experienced robotics software engineer with a strong background in motion planning, robot perception, and deep reinforcement learning. Skilled in designing and implementing high-quality software for robotic automation systems. Proven ability to work closely with customers, scope projects, and lead development teams toward successful production deployments.


I'm interested in deep reinforcement learning for robotic manipulation, transfer learning in robotics, and efficient skill acquisition and generalization in autonomous systems. Much of my research revolves around inferring properties of the physical world for robotic control.


Homer Walke, Jonathan Yang, Albert Yu, Aviral Kumar, Jędrzej Orbik, Avi Singh, Sergey Levine

Conference on Robot Learning (CoRL), 2022; RSS Workshop on Learning from Diverse, Offline Data, 2022

arXiv / website

We demonstrate that incorporating prior data into robotic reinforcement learning enables autonomous learning, substantially improves sample efficiency, and leads to better generalization. Our method learns new robotic manipulation skills directly from image observations, with minimal human intervention needed to reset the environment.


Charles Sun*, Jędrzej Orbik*, Coline Devin, Brian Yang, Abhishek Gupta, Glen Berseth, and Sergey Levine

Conference on Robot Learning (CoRL), 2021

arXiv / blog post / website / code

We propose a reinforcement learning system that learns mobile manipulation tasks continuously in the real world without any environment instrumentation, without human intervention, and without access to privileged information such as maps, object positions, or a global view of the environment.


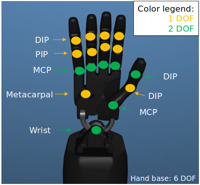

Jędrzej Orbik, Dongheui Lee, Alejandro Agostini

IEEE International Conference on Development and Learning (ICDL), 2021

paper / website / source code

We find that rewards learned with existing inverse reinforcement learning (IRL) approaches are strongly biased toward the demonstrated actions, because samples are scarce in the vast state-action space of dexterous manipulation. In this work, we use statistical tools for random sample generation and reward normalization to reduce this bias. We show that this approach improves the learning stability and robustness of policies trained with the inferred reward.


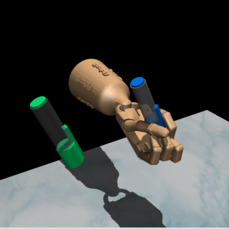

Jędrzej Orbik, Shile Li, Dongheui Lee

18th International Conference on Ubiquitous Robots (UR), 2021

paper / website

We propose a low-cost framework that maps human hand motion captured by a single RGB-D camera to a dexterous robotic hand. The framework combines neural-network-based hand pose estimation with inverse kinematics for real-time hand motion retargeting. Empirically, it successfully imitates human grasping tasks.